
Article ID: 1571

Last updated: 18 Jan, 2018

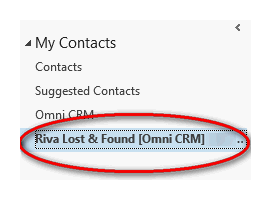

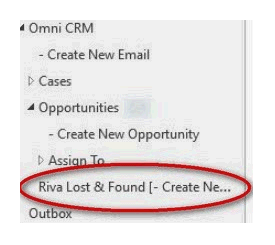

As you may already be aware, on the afternoon of October 26th 2016, Riva Cloud experienced an unplanned maintenance window. Ensuring data quality, secure communication and overall reliability of the Riva Cloud platform is our top priority. We sincerely apologize for the impact that this incident may have caused your business. We take this issue seriously. Our top priorities have been to ensure we clearly communicate the issue status via the system maintenance status page and to investigate the root cause. The full root cause of this issue, as well as information on the service maintenance window, is outlined below.

Summary

Six Riva Cloud processing pods (na5, na6, na7, na8, na9, na10) were taken offline by the Riva Cloud Operations Team. During this time, the management portal was placed into a system maintenance mode to allow our teams to identify, isolate, recover and restore service. All dedicated instances and other Riva Cloud processing pods were unaffected during the outage, although the web portal for these pods was also deactivated as a precautionary measure. The system maintenance status page, with a detailed timeline, was updated every 20-30 minutes during the unplanned maintenance window to keep all parties up to date on the activities being taken by our team.

Be assured, this issue is not related to any security vulnerability in Riva Cloud. At no point was there a security risk to the platform. The concerns were purely about data quality and integrity.

Background

In order to synchronize, Riva persists certain minimum types of information for core functionality and performance improvement. This information includes data fields such as the unique record database ID, modification date time stamps, and item change revisions. It is kept in persistent storage unique to each user. This persistent storage is referred to as the transaction database or as metadata.

Root Cause Analysis

On October 26, 2016 at 3:41 p.m.
MT (UTC-0600), an operating system I/O failure caused 4 storage volumes to be unmounted. The team was quickly alerted to this and was able to disable the synchronization services within minutes. By 3:50 p.m. all affected synchronization processes had been terminated.

When the storage volumes were unmounted in the midst of processing, all active synchronization cycles entered an inconsistent state, resulting in processing errors because access to the previously available databases disappeared. Any queued synchronization cycles started as they normally would. However, when they started, the paths where the storage volumes had been mounted were now empty folders. Depending on the state of the individual synchronization and the configuration, some synchronizations aborted and others continued processing.

For the synchronization cycles that continued processing, the empty paths were treated by Riva as though the users had never synchronized before. Because the users' transaction databases were missing, the system initiated a “First Sync”. For those synchronization cycles where a “First Sync” occurred, any mailbox item with a category set to the same value as the synchronization policy would have been moved by Riva to a new "Lost & Found" sub-folder to avoid creating unwanted or duplicate records during this first synchronization.

Once the team was confident that the underlying issue was identified and that it was limited to the pods where it was initially detected, focus shifted to understanding the impact and the number of affected synchronization cycles. No two synchronization cycles are the same and no two data sets are the same. Each active and queued synchronization was manually reviewed and verified.

As of October 27, 2016 at 1:00 a.m. MT (UTC-0600), all synchronization services have been restored except for those customers directly affected during the execution window when the synchronization was running against the unmounted storage volumes.
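
The failure mode described above, where an empty mount point looks the same as a user who has never synchronized, can be illustrated with a short sketch. This is not Riva's implementation. The marker file name, the per-user folder layout, and the `decide_sync` function are all hypothetical and are chosen only to show one way an unmounted volume could be told apart from a genuine first synchronization.

```python
from enum import Enum
from pathlib import Path


class SyncDecision(Enum):
    ABORT_STORAGE_UNAVAILABLE = "abort"
    FIRST_SYNC = "first_sync"
    INCREMENTAL_SYNC = "incremental_sync"


# Hypothetical marker file, written once at the root of each storage volume.
VOLUME_MARKER = ".volume-online"


def decide_sync(volume_root: Path, user_id: str) -> SyncDecision:
    """Pick a sync mode without mistaking a missing volume for a new user."""
    # When a volume is unmounted, its mount point is just an empty folder,
    # so the marker file that lives on the volume itself is absent.
    if not (volume_root / VOLUME_MARKER).is_file():
        return SyncDecision.ABORT_STORAGE_UNAVAILABLE

    # Assumed layout: one folder per user holding the transaction database.
    user_store = volume_root / user_id
    if user_store.is_dir() and any(user_store.iterdir()):
        return SyncDecision.INCREMENTAL_SYNC

    # The volume is online and the user has no metadata: a real first sync.
    return SyncDecision.FIRST_SYNC
```

With a check of this kind, a queued cycle that finds an empty path would abort instead of starting a “First Sync”, because the absence of the marker signals that the storage itself is unavailable.
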
A total of 71 customers were immediately identified as having been directly affected by this “First Sync” anomaly. A full timeline and breakdown of the events is available via the Riva Cloud Knowledge Base.

Impact for Affected Customers

For mailboxes that were impacted by this issue, end-users will find a "Lost & Found" sub-folder with the October 26th date in their Address Book, Calendar, Task Lists and Assign To folders.

In order to automate any remediation, it is best that end-users not MOVE or DELETE any calendar entries until appropriate remediation steps can be taken. All synchronization policies for affected customers have been disabled intentionally. If you have not already been contacted by the Riva Success Team and you see a "Lost & Found" folder with October 26th's date listed, then please contact us to begin remediation steps. To determine if you are syncing on an affected pod (na5, na6, na7, na8, na9, na10), please see the following Knowledge Base article on how to confirm your Riva Cloud Synchronization Pod and Mode.

Action Plan

As a result of this issue and the remediation steps required to restore all customers, we are taking the following actions:

In Closing

We are continuously monitoring all systems to ensure the initial cause of the issue does not recur. We realize your successful daily customer interactions, sales and support processes are dependent on a trusted system providing reliable, secure and high-quality processing; this is our top priority. We sincerely apologize for any impact these disruptions may have caused to your business and appreciate your continued trust as we continue to improve our technology, processes and services. If there are any outstanding questions or you have been affected by this issue, please do not hesitate to contact us.

Sincerely,
Riva Cloud Operations and Success Teams

Timeline

Technical Details

Note: All times are in Mountain Time (UTC-0600)

Thursday, October 27 2016

Wednesday, October 26 2016

Wednesday, October 26 2016


Revision: 7

